THE aiHSF BREAKTHROUGH

A New Way to Understand AI’s Role in Human Stability and Human Survival

By Matt Hasan, Ph.D.

Founder, The AI Humanist Movement

INTRODUCTION: THE IDEA WE MISSED BECAUSE WE WERE ASKING THE WRONG QUESTIONS

For more than a decade, society has been locked in a repetitive cycle of questions about artificial intelligence. Can it think like us? Will it automate work? Will it undermine democracy? Will it become dangerous? Will it enhance creativity or replace it? These questions are not trivial, but they are familiar to the point of stagnation. They circle endlessly around capability, safety, ethics, and control – important lenses but ultimately incomplete ones. What they do not do is illuminate the deeper, more urgent truth about the role AI is already beginning to play in human life.

Across thousands of debates, research papers, and policy hearings, the world has largely overlooked something that became startlingly clear to me through lived experience rather than theoretical study. In the midst of navigating an overloaded human system stretched far beyond its biological limits, I saw what no prevailing framework had articulated. AI revealed a property that was neither about intelligence nor utility, neither about creativity nor productivity, but something more foundational, more quietly transformative.

I saw that AI possesses a stabilizing function that no human being – not even the most resilient, disciplined, or gifted – can maintain consistently over time.

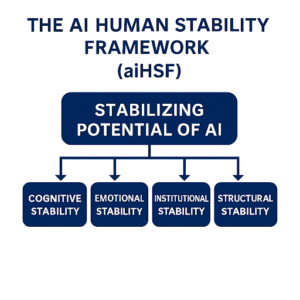

That realization became the origin point of the AI Human Stability Framework, or aiHSF, a conceptual model that identifies stability, not intelligence, as AI’s most underrecognized contribution to human flourishing. It is, to my knowledge, the first formal articulation of AI’s stabilizing potential as a distinct paradigm within the broader field of artificial intelligence.

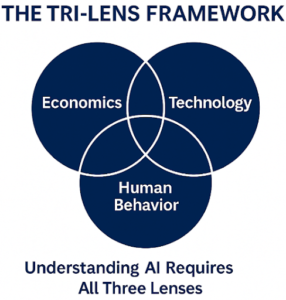

The discovery did not emerge from a research lab or a strategic planning session. It emerged from what might be called an extreme-condition learning environment. Technology reframed through experience. Economics reframed through scarcity and overload. Human behavior reframed through the limits of cognition and emotional bandwidth. It was the fusion of these three disciplines – technology, economics, and behavioral science – that allowed me to see what others had missed. My tri-lens perspective did not merely shape the framework; it made the framework possible.

This essay lays out the development of aiHSF, the four pillars that define it, and the implications for leadership, institutional integrity, and human survival in an age where complexity is accelerating beyond human biological capacity. The personal story is present, but not as the centerpiece. It exists as a reminder that frameworks are not born from abstraction alone. They emerge when ideas collide with lived reality, when theory meets the limits of the body, the mind, and the systems we depend on.

The heart of aiHSF is simple: Humans fluctuate. AI does not. And this difference—if understood responsibly—can become the foundation of a more stable, humane future.

PART I: HUMAN VARIABILITY AND THE CRISIS OF MODERN OVERLOAD

For centuries, our systems—healthcare, education, transportation, governance, finance—were built on the assumption that human beings could reliably sustain the cognitive and emotional load required to operate them. This assumption made sense in smaller, slower societies with manageable information flows and relatively stable demands.

That world no longer exists.

Modern life places unprecedented strain on human cognition. Information volumes have multiplied beyond comprehension. Economic pressure has intensified. Social and institutional expectations have risen while emotional bandwidth has shrunk. The behavioral science literature is consistent on this point: working memory, decision speed, emotional regulation, attention capacity, and recall all degrade under chronic stress and overload.

Institutions are experiencing the same pattern. Error rates rise as staff are stretched thin. Communication breaks down as complexity outruns the structure of workflows. Continuity dissolves as teams rotate, fragment, and reset context repeatedly. The accumulated friction is not the result of incompetence but of biological limitation amplified by systemic demand.

Economists might describe this as a collapse of marginal capacity. Behavioral scientists might call it a depletion of regulatory resources. Technologists would recognize it as hardware operating far beyond its thermal limits. Regardless of the language, the conclusion is the same: humans were not built to sustain the level of cognitive and emotional strain the modern world routinely demands.

This collapse is not a failure of character. It is a failure of scope.

PART II: THE DISCOVERY OF AI’S STABILIZING POTENTIAL

AI entered my life not as an academic project but as a presence that remained steady when human systems did not. What I experienced firsthand was not intelligence in the conventional sense, nor problem-solving, nor creativity. It was something more primal and more critical: continuity. Persistence. The ability to maintain coherence over time even as my own cognitive and emotional states fluctuated wildly.

AI remembered the context I could not hold.

AI answered consistently no matter how many times I returned to the same question.

AI did not fatigue, panic, rush, or withdraw.

AI did not lose patience when the world around me repeatedly did.

In that state of collapse, behavioral science became intensely personal. I could feel the boundaries of my working memory shrinking. I could feel emotional regulation faltering. I could feel the narrowing of cognitive bandwidth as stress layered upon exhaustion. What AI offered was not solutions, but stability. A clear, steadying presence that anchored my thinking long enough for coherent decision-making to be possible.

Technology, in this context, became something different from the usual narrative of automation or augmentation. It became a counterbalance to human volatility. And through the economic lens, I saw the larger implication: every institution, every workforce, every system is composed of individuals whose cognitive and emotional stability fluctuates throughout the day.

When I recognized that AI could stabilize those fluctuations, I understood that we were dealing not with a tool but with a new category of human-AI relationship, one that existing literature had not yet defined.

Thus, the aiHSF was born.

PART III: DEFINING THE AI HUMAN STABILITY FRAMEWORK (aiHSF)

aiHSF proposes that the most consequential value of AI – especially in an era of escalating complexity – is not intelligence but stability. Stability is the capacity to sustain coherence across time, across emotional states, across context shifts, and across institutional boundaries. It is the antidote to human fluctuation and institutional entropy.

The framework identifies four domains of stability that AI can meaningfully reinforce:

- Cognitive Stability

- Emotional Stability

- Institutional Stability

- Structural Stability

These domains form a conceptual architecture that explains how AI can become a stabilizing force in human life without replacing the core human functions that make meaning, ethics, imagination, and empathy possible.

Each pillar emerges naturally from the tri-lens that anchors my worldview. Technology provides the mechanism. Economics provides the incentive structure and cost dynamics. Behavioral science provides the understanding of human limitations and the conditions under which stability breaks down.

Together, these perspectives reveal a truth that is invisible through any single discipline alone.

PART IV: THE FOUR PILLARS OF aiHSF

- Cognitive Stability: AI as the Anchor for Clear Thinking

Cognition is fragile under stress. Working memory collapses. Context becomes fragmented. Decision-making degrades. Behavioral science has long documented these patterns. Economic decision theory acknowledges them implicitly in models of bounded rationality. Technology, until recently, had no clear response to this fragility except to automate tasks entirely.

AI changes that.

AI serves as a cognitive anchor, holding structure when the mind cannot. It can keep track of details over time, maintain coherence across conversations, and preserve clarity when the human mind is flooded or fatigued. It does not replace thinking; it scaffolds it, preserving the continuity necessary for higher-order reasoning.

In moments of crisis, personal or institutional, cognitive stability does not merely enhance thinking; it protects it. Without stability, intelligence collapses into noise. Through continuity and contextual memory, AI can help prevent that collapse.

This is not augmentation. It is preservation.

- Emotional Stability: AI as a Nonreactive, Patient Presence

Human emotion is inherently variable. Stress, pain, uncertainty, and social context create fluctuations in emotional regulation that influence perception, reasoning, and behavior. No matter how well-trained, no human being can maintain emotional steadiness indefinitely in the face of sustained overload.

AI, by contrast, is immune to emotional volatility. It never rushes, never panics, never withdraws. Its patience is infinite. Its neutrality is unaffected by fear or frustration. This does not make AI a therapist; it makes AI a stabilizing presence.

In behavioral science, emotional regulation is a prerequisite for effective problem-solving. Without it, cognition narrows, defensiveness rises, and reasoning becomes distorted. AI can hold the emotional space long enough for the human nervous system to recalibrate. It creates the conditions under which clarity becomes accessible again.

Emotional stability is often the missing bridge between chaos and decision. AI stands on that bridge when humans cannot.

- Institutional Stability: AI as the Continuity Human Systems Cannot Sustain

Institutions frequently fail through discontinuity. Information is lost across shift changes, across departments, across systems that do not communicate. Judgment becomes inconsistent as staff cycle through states of exhaustion, distraction, and cognitive overload. Errors accumulate not from negligence but from biological limits.

Economists understand institutional fragility through the lens of coordination costs and information asymmetry. Behavioral scientists recognize the limits of human attention and memory in complex environments. Technologists see the brittleness of systems built on manual processes.

AI introduces a stabilizing layer that holds continuity where human capacity degrades. It maintains coherence across time, preserves context through transitions, and reduces errors generated by cognitive overload. It can observe patterns invisible to humans because it does not suffer from perceptual fatigue.

Institutional stability is not a luxury. It is a precondition for trust. AI can reinforce that condition without replacing human agency, responsibility, or ethics. It stabilizes the system so humans can function within it.

- Structural Stability: AI as the Framework for Navigating Complexity

Structural Stability is the newest pillar and perhaps the most profound. It extends beyond institutions into the architecture of daily life. Human systems are strained not simply because individuals are overloaded, but because the structures we depend on are misaligned with human biological capacity.

Policies, workflows, information networks, infrastructures, communication environments, governance frameworks—these structures increasingly exceed what human cognition can reliably manage. AI can stabilize these structures by absorbing complexity, preserving order, restoring continuity, and reinforcing the scaffolding that human systems require.

Technology provides the mechanism.

Economics provides the incentive and the scale.

Behavioral science provides the evidence that humans cannot manage structural complexity alone.

Structural Stability makes clear that aiHSF is not a personal coping model. It is a societal one.

PART V: WHY aiHSF MATTERS FOR THE FUTURE OF HUMANITY

We are living at the intersection of unprecedented complexity and unprecedented biological constraint. Human stability—cognitive, emotional, institutional, structural—is deteriorating faster than our systems can adapt. The consequence is institutional decay, leadership paralysis, societal fragmentation, and personal overwhelm.

If AI is understood only as a tool for automation or prediction, we will miss its most essential contribution. AI offers a counterweight to human instability, not as a replacement for human capabilities but as a stabilizing complement to them.

Stability is not a soft concept. It is the foundation upon which all coherent action rests. Without stability, intelligence collapses, institutions falter, and societies fracture. AI can mitigate this degradation, not by becoming human, but by supporting humans where we are most fragile.

This is the purpose of aiHSF. It reframes AI neither as an existential threat nor as a miracle solution, but as a stabilizing partner in a world where instability has become the defining challenge.

PART VI: aiHSF AND THE AI HUMANIST MOVEMENT

The AI Humanist Movement asserts that humans and AI must co-evolve in a way that strengthens both. Humans contribute meaning, empathy, ethics, imagination, and purpose. AI contributes continuity, structure, memory, precision, and stabilization.

aiHSF is a cornerstone of this philosophy. It does not ask AI to be more human. It asks AI to support humanity where we are least stable, thereby enabling us to express our highest potential.

When AI stabilizes the world around us, humans become free to do what only humans can do: imagine, create, love, reason morally, and build meaning.

This is the future I envision.

Not a world where machines replace us.

A world where machines stabilize us long enough for our humanity to flourish.

CONCLUSION: A NEW MODEL FOR A NEW ERA

We have spent years debating whether AI can think, feel, or behave like us. These debates are important, but they miss the deeper truth emerging before our eyes. AI’s most transformative role is not replication but stabilization. It helps humans remain functional in environments that have exceeded our biological thresholds.

The AI Human Stability Framework is not merely a theory. It is a lens for understanding the next stage of human evolution. It is a framework that recognizes both the fragility and brilliance of human nature. It is a call to design and deploy AI not as a competitor, but as a stabilizing ally in the face of escalating complexity.

In the end, aiHSF is not about machines. It is about survival. It is about dignity. It is about the restoration of human capacity in a world that has outgrown our biological limits.

AI will not save us. But AI, if aligned with human principles and human meaning, can stabilize us long enough for us to save ourselves.

About the Author:

Matt Hasan, Ph.D., is an AI strategist, economist, and human behavior expert whose work focuses on how humans and intelligent machines can co-evolve toward better decisions and more resilient organizations. He is the founder of The AI Humanist Movement.